
Shelagh A. Mulvaney, Leonard Bickman, Nunzia B. Giuse, E. Warren Lambert, Nila A. Sathe, Rebecca N. Jerome, A Randomized Effectiveness Trial of a Clinical Informatics Consult Service: Impact on Evidence-based Decision-making and Knowledge Implementation, Journal of the American Medical Informatics Association, Volume 15, Issue 2, March 2008, Pages 203–211, https://doi.org/10.1197/jamia.M2461


Abstract

Objective: To determine the effectiveness of providing synthesized research evidence to inform patient care practices via an evidence-based informatics program, the Clinical Informatics Consult Service (CICS).

Design: Consults were randomly assigned to one of two conditions: CICS Provided, in which clinicians received synthesized information from the biomedical literature addressing the consult question, or No CICS Provided, in which no information was provided.

Measurement: Outcomes were measured via online post-consult forms that assessed consult purpose, actual and potential impact, satisfaction, time spent searching, and other variables.

Results: Two hundred twenty-six consults were made during the 19-month study period. Clinicians primarily made requests to update themselves (65.0%, 147/226) and were satisfied with the service results (mean 4.52 of a possible 5.0, SD 0.94). Intention-to-treat (ITT) analyses showed that consults in the CICS Provided condition had a greater actual and potential impact on clinical actions, and higher clinician satisfaction, than No CICS Provided consults. Evidence provided by the service primarily affected the use of a new or different treatment (OR 8.19, 95% CI 1.04–64.00). Reasons for little or no impact included a lack of evidence addressing the issue and the clinician already implementing the practices indicated by the evidence.

Conclusions: Clinical decision-making, particularly regarding treatment issues, was statistically significantly impacted by the service. Programs such as the CICS may provide an effective tool for facilitating the integration of research evidence into the management of complex patient care and may foster clinicians' engagement with the biomedical literature.

Introduction

Few studies have carefully assessed the clinical impact of evidence-based medicine consultation (“informationist”) services. Two reviews of clinical medical librarianship (CML) interventions pointed out the considerable challenges of measuring outcomes related to clinical practice parameters and patients' health.1 Previous evaluation studies used methods that could not support causal inferences about program effects.2 The current study evaluated the impact of clinical evidence summaries provided by the Vanderbilt Clinical Informatics Consult Service (CICS) to clinicians in intensive care units (ICUs). Because the program was already established in the hospital, the study used a randomized controlled design to determine the effectiveness of the CICS for clinicians' decision-making and patient care. The study examined which clinical situations prompted consults; the quantity of consultative evidence provided and the magnitude and form of its clinical impact; barriers that prevented implementation of the evidence; the time clinicians spent searching for case-related evidence, with and without the CICS; clinicians' use of colleagues for evidence-related consultations; and how clinicians used and perceived the CICS.

Clinicians' adherence to practice guidelines is a salient and well-studied aspect of evidence-based care delivery.3–7 In the last 10 years, MEDLINE has indexed over 250 reviews of adherence to clinical practice guidelines and 90 randomized guideline implementation trials across medical specialties. Another important but less well-studied process in evidence-based medicine is how clinicians, in the absence of established guidelines, seek out and use evidence in everyday patient care. Studies document barriers to introducing research-derived evidence into practice. Such barriers include scarcity of the time required to find and review research;8–10,11 lack of the skills needed to evaluate evidence;8,9 lack of the skills needed to synthesize evidence;12 impediments imposed by organizational structure;5 non-conducive clinician attitudes;3 and the difficulty of establishing the relevance of published evidence to a particular patient.13 To promote and enhance evidence-based practices, experts have recommended interventions that target specific workflow-associated barriers and facilitators.7 Such projects take several approaches: computer-generated, evidence-based decision support;14,15 evidence-based reminders;16,17 evidence-based feedback;18,19 and improved access to evidence.20

For decades, medical librarians have sought and developed better means to integrate information into patient care. One familiar knowledge-management prototype is the clinical medical librarianship (CML) model, which has been successful in expanding librarians' roles to include service as information consultants for patient care.21–23 Recent interest by information professionals in identifying and resolving unanswered information needs led to their deployment as “clinical informationists,” individuals with expertise in both information seeking and appraisal and a high level of domain knowledge who participate as professional members of health care teams. Informationists work at the intersection of knowledge and action, and as expert information providers, identify and address the complex information needs of the clinical team.24–30

In 1996, the Eskind Biomedical Library (EBL) at Vanderbilt University Medical Center initiated the Clinical Informatics Consult Service (CICS), an early “informationist” model. The primary mission of the CICS is to facilitate access to and application of research results in clinical practice. The service provides direct consultative input to multiple specialty-based patient care teams and clinics within the Medical Center.31–34 Since its inception, the CICS has aimed to overcome two important barriers to achieving evidence-based practice. First, the CICS addresses well-documented reports that clinicians do not have time to search for and summarize evidence related to their daily practice.8–11 Second, the CICS provides advice for complex or unique patients for whom treatment guidelines do not exist and for whom evidence-informed care may require intensive searching and synthesis of the medical literature. Answering questions on which the literature shows no clear consensus can be time-consuming, yet care delivery may be compromised if possible treatment options are not located or examined.

The CICS program model has three components. 1) Specialized training for each participating librarian in research methods and statistics, with additional training in the medical content areas of future consultations (e.g., neonatal care). Training is highly standardized, and participating librarians must pass peer- and/or clinician-led verification and calibration sessions to ensure a core level of competence. 2) Integration of CICS librarians within clinical care teams to field consultation requests related to patient care questions (and to observe or deduce the need for such requests). 3) Provision of search strategies, relevant articles, and research syntheses in response to clinical consult questions, tailored to the patient at hand using specialized knowledge gained as a member of the clinical care team. In answering questions, CICS librarians help focus the question at its inception, search the research literature thoroughly, and filter the full text of the literature for quality to aid the assimilation of evidence into clinical practice. Ultimately, the CICS provides the patient care team with an oral and written synthesis and critique of the relevant research.

Methods

Authorization for Study

Prior to initiating the study, the authors obtained human subjects approval from the Vanderbilt Institutional Review Board. Because this was an exempt study evaluating an educational program, consent forms were not required from patients or clinicians. Eligible clinicians were notified about the study and its randomization processes by email, through explanatory cards handed out by project staff during clinical rounds, and in focused meetings with the teams on each participating study unit. Administrative and clinical leaders of each unit authorized the procedures for randomization and missing data prevention.

Study Site

Vanderbilt University Hospital is a 658-bed academic tertiary care facility in Nashville, Tennessee, that provides adult and pediatric care to local and referral populations. Project staff selected four Vanderbilt University Hospital critical care units to participate in the study based on established acceptance of the CICS on each unit, a similar level of experience providing CICS consults among the unit librarians, a stable history of CICS use on the unit, and the way the CICS had been used for patient-oriented questions. For example, the Emergency Department was not included because it primarily uses the CICS for educational purposes (training residents or fellows) rather than for patient-critical issues. The 35-bed Pediatric Intensive Care Unit (PICU), the 26-bed Medical Intensive Care Unit (MICU), the 60-bed Neonatal Intensive Care Unit (NICU), and the 31-bed Trauma Unit (TICU) were initially included as study units. However, the PICU was dropped from the study after 9 consults because of a shortage of PICU-trained CICS personnel within the Eskind Library system.

Clinical Informatics Consult Service (CICS)

During the study, one designated CICS librarian on each study unit participated in clinical rounds three times per week with the critical care team. That librarian completed the majority of the unit's CICS consult requests, which the requesting clinician prioritized at initiation as high (response required within 0.5 days), medium (within 3 days), or low (within 7 days). Before the study began, these time frames were set by consensus among experienced CICS librarians and clinicians on optimal response times. Each consultation response included a documented bibliographic search strategy with corresponding references, a targeted list of full-text articles, and a written synthesis and critique of the relevant research. Typically, the CICS librarian also shared this summary with the team verbally during a subsequent rounding session. For future reference by library staff and clinicians, CICS staff posted the consult summaries and related articles to the library's web-based intranet evidence repository.
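The priority scheme amounts to a lookup from priority level to a response window. The sketch below is illustrative only: the dictionary and function names are hypothetical, and it assumes that “0.5 days” means 12 hours measured from the time of the request, which the text does not specify.

```python
from datetime import datetime, timedelta

# Response windows by consult priority, as listed in the text.
# Hypothetical names; "0.5 days" is assumed to mean 12 hours from the request.
RESPONSE_WINDOW = {
    "high": timedelta(days=0.5),
    "medium": timedelta(days=3),
    "low": timedelta(days=7),
}


def response_due(requested_at: datetime, priority: str) -> datetime:
    """Return the deadline for answering a consult of the given priority.

    Raises KeyError for an unknown priority level.
    """
    return requested_at + RESPONSE_WINDOW[priority]


if __name__ == "__main__":
    request = datetime(2005, 1, 10, 8, 0)
    for level in ("high", "medium", "low"):
        print(level, response_due(request, level))
```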

Randomization Procedures

On a consult-by-consult basis, clinicians could ask the rounding librarian to opt out of the randomization process if the evidence was deemed clinically critical and needed immediately, and if no resources were available to complete a search. Librarians entered the question received, unit, requestor, date of receipt, and priority level into a consult request database. Stratified by consult priority level, questions were immediately and automatically randomized by the database, using a random number generator, to either the “CICS Provided” or “No CICS Provided” condition. Clinicians were immediately notified by page and email of the condition to which their consult had been randomized, and could again opt out at this point; Trauma Unit clinicians asked not to be paged during the study and received only the email. Clinicians in the “CICS Provided” condition received typical consult results, whereas clinicians in the “No CICS Provided” condition did not. Because this was an effectiveness trial, clinicians could conduct their own literature searches and reviews regardless of a consult's randomization status.
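The text states only that consults were randomized with a random number generator, stratified by priority level. One common way to implement stratified allocation is permuted-block randomization within each stratum; the sketch below assumes that scheme, a block size of 2, and hypothetical class and method names, none of which are confirmed by the study. Opt-outs are not modeled.

```python
import random
from typing import Dict, List, Optional

CONDITIONS = ("CICS Provided", "No CICS Provided")


class StratifiedRandomizer:
    """Permuted-block randomization within strata (here, consult priority levels).

    Hypothetical sketch: the block size and class design are assumptions.
    """

    def __init__(self, seed: Optional[int] = None, block_size: int = 2) -> None:
        if block_size <= 0 or block_size % len(CONDITIONS) != 0:
            raise ValueError("block_size must be a positive multiple of the number of conditions")
        self._rng = random.Random(seed)
        self._block_size = block_size
        # Remaining, not-yet-used assignments in the current block of each stratum.
        self._pending: Dict[str, List[str]] = {}

    def assign(self, priority: str) -> str:
        """Return the condition for the next consult in the given priority stratum."""
        block = self._pending.get(priority)
        if not block:
            # Start a new block holding equal numbers of each condition, in random order.
            block = list(CONDITIONS) * (self._block_size // len(CONDITIONS))
            self._rng.shuffle(block)
            self._pending[priority] = block
        return block.pop()


if __name__ == "__main__":
    randomizer = StratifiedRandomizer(seed=2004)
    for priority in ["low", "medium", "low", "high"]:
        print(priority, randomizer.assign(priority))
```

Because each block contains one consult per condition, the two arms are balanced within every priority level after each completed block, which is the usual reason for stratifying.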

Consult Rating Procedures

Clinicians completed both a brief pre-consult request form and a post-consult evaluation form. The pre-consult form was completed on paper by the clinician, either alone or with help from the CICS librarian. It included a standardized checklist of reasons for requesting a clinical consultation (e.g., update my knowledge) and a standardized checklist of clinical actions that the consult results might influence (e.g., change drug dosage); participants could add other reasons or actions if needed. Post-consult forms were emailed to clinicians 3 days after consult results became available, with the exact send date depending on the time frame (priority level) of the consult request. The consult request database automatically inserted the question prompting the request, the unit, and the clinician's name into the form. Clinicians completed the forms by following a hyperlink embedded in the email. For consult requests randomized to “No CICS Provided” status, clinicians received the URL for the evaluation form in an email sent 5 days after the consult request. This email was delayed to give clinicians time to review, and potentially act on, any information they located on their own. Clinicians in the “No CICS Provided” condition who did not do their own search could not complete this form because there was no evidence or search to rate.

Reminders to clinicians helped prevent missing response data. If clinicians did not complete their post-consult rating form within 3 days, they received a second (reminder) email with a link to the form. A final (third) email reminder was sent 3 days after that. If the form was still not completed within 3 days of the last reminder, project staff paged or telephoned clinicians to ask them to fill it out; by prior agreement, Trauma Unit clinicians were never paged to fill out research forms. The last measure to prevent missing follow-up data was mailing a printed copy of the form to the clinician. To address potential clinician “fatigue” in completing forms, a $5 gift card was provided as an incentive for each completed form.
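The escalation sequence for an uncompleted form can be expressed as a schedule keyed to the date of the first form email. This is a hypothetical sketch: the function name, the use of the unit code "TICU", the assumption that Trauma Unit clinicians were telephoned instead of paged, and modeling the mailed paper copy without a date (the text gives no timing for it) are all assumptions.

```python
from datetime import date, timedelta
from typing import List, Optional, Tuple

Step = Tuple[Optional[date], str]


def follow_up_schedule(first_email: date, unit: str) -> List[Step]:
    """List the follow-up steps for a post-consult form that stays incomplete.

    Each later step happens only if the form is still not completed.
    """
    steps: List[Step] = [
        (first_email, "email with link to form"),
        (first_email + timedelta(days=3), "reminder email"),
        (first_email + timedelta(days=6), "final reminder email"),
    ]
    # Three days after the final reminder, staff paged or telephoned clinicians.
    # Trauma Unit clinicians were never paged; a call is assumed for them instead.
    contact = "telephone call" if unit == "TICU" else "page or telephone call"
    steps.append((first_email + timedelta(days=9), contact))
    # Last resort: a printed copy of the form was mailed (timing not stated).
    steps.append((None, "mail printed copy of form"))
    return steps


if __name__ == "__main__":
    for when, action in follow_up_schedule(date(2005, 3, 1), "MICU"):
        print(when, action)
```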

The 10-item post-consult form assessed time spent searching for evidence by the clinician and associates/trainees; alternate sources of information, such as formal and informal consults with colleagues; the overall immediate and future impact of the consult results (or of the clinician's own searches, if completed); clinical actions influenced by the consult; barriers to implementing the consult results; consult quality; and overall satisfaction. Items used a 5-point Likert-type format, free text, or multiple selection (“check all that apply”).

Analytic Approach

The main outcomes were analyzed with an intention-to-treat (ITT) approach based on the two randomized groups (CICS Provided/No CICS Provided). Thus, for these analyses, data were analyzed only by randomization condition, even if the clinician opted out of “No CICS Provided” status. Clinicians were asked to indicate whether they wanted to opt out of randomization before they knew the condition of their consult. However, clinicians did not always state their opt-out preference before learning the condition, and their opt-out requests were always honored. In the analyses we therefore assumed that every opt-out occurred after the clinician knew the experimental condition, and all opt-outs were coded as No CICS Provided consults. This is the most conservative treatment of opt-outs: all opt-out consults, every one of which actually received results, were placed in the No CICS Provided group for the ITT analyses, which biases comparisons toward finding no difference between conditions. When clinicians did not receive CICS search results, they completed only the subset of assessment questions relevant to their own searches (e.g., no questions about the research summary).
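The coding rule for opt-outs can be written as a small function. In this sketch the record type and function names are hypothetical; it contrasts the ITT analysis group with the consult's actual exposure to show why the rule is conservative: opt-out consults received results but are counted with the No CICS Provided group.

```python
from dataclasses import dataclass

CICS = "CICS Provided"
NO_CICS = "No CICS Provided"


@dataclass(frozen=True)
class Consult:
    randomized_to: str  # CICS or NO_CICS
    opted_out: bool


def itt_group(consult: Consult) -> str:
    """Analysis group for the ITT analyses: every opt-out is coded No CICS Provided."""
    return NO_CICS if consult.opted_out else consult.randomized_to


def received_results(consult: Consult) -> bool:
    """Whether CICS results were actually delivered; all opt-outs received them."""
    return consult.opted_out or consult.randomized_to == CICS


if __name__ == "__main__":
    consults = [Consult(CICS, False), Consult(NO_CICS, False), Consult(NO_CICS, True)]
    # Consults counted as controls even though they received CICS results.
    exposed_controls = [c for c in consults if itt_group(c) == NO_CICS and received_results(c)]
    print(len(exposed_controls))  # 1
```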

The primary analysis related randomized condition to each outcome (immediate and potential future impact on clinical decisions, the action index, the number of research articles read, satisfaction, colleague consults, and time spent searching), using random coefficients hierarchical models to control for the clustering of consults within clinicians.35 If ratings by the same clinician are correlated, a traditional analysis of variance (ANOVA), which assumes independent observations, can yield spuriously significant results. We therefore used a two-level model in which each clinician's intercept (the mean of that clinician's own ratings) accounted for multiple ratings by the same clinician.
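The paper does not print its model equation. A standard two-level random-intercept model consistent with the description, with consults $i$ nested within clinicians $j$, could be written as follows; the notation and the normality assumptions are illustrative and not taken from the study.

$$
y_{ij} = \gamma_{00} + \gamma_{10}\,\mathrm{CICS}_{ij} + u_{0j} + \varepsilon_{ij},
\qquad u_{0j} \sim \mathcal{N}(0, \tau_{00}),
\qquad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
$$

Here $\mathrm{CICS}_{ij}$ is 1 if consult $i$ by clinician $j$ was randomized to CICS Provided and 0 otherwise, $\gamma_{10}$ is the treatment effect, and $u_{0j}$ is clinician $j$'s deviation from the overall intercept $\gamma_{00}$. The share of outcome variance due to clinicians is the intraclass correlation $\rho = \tau_{00}/(\tau_{00} + \sigma^2)$; when $\rho > 0$, ratings from the same clinician are not independent and ANOVA standard errors can be misstated, which is the concern the clinician intercept addresses.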

To measure the clinical effects of each CICS consultation, the project created an “action index”: the number of specific clinical actions influenced by the consult results. Actions included the initiation, addition, change, discontinuation, or inhibition of any clinician-initiated event related to diagnosing or treating the patient. The index is a simple count of the actions that clinicians reported as influenced by the consult.
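As a sketch of the computation, the index is a count of distinct endorsed actions. The category list below is borrowed from the action rows of Table 2 as an assumption; the study's form may have included other options (for example, write-in “other” responses), and the function name is hypothetical.

```python
from typing import Iterable

# Action categories as listed in Table 2 (assumed to be the checklist options).
ACTION_CATEGORIES = frozenset({
    "Add diagnostic test", "Change diagnostic test", "Cancel diagnostic test",
    "Other diagnostic test", "Change drug dose", "Change drug", "Add drug",
    "Different or new treatment", "Duration of treatment", "Timing of treatment",
    "Stop treatment", "Add component of treatment", "Influence others",
})


def action_index(endorsed: Iterable[str]) -> int:
    """Number of distinct recognized actions a clinician reported as influenced."""
    return len(ACTION_CATEGORIES.intersection(endorsed))


if __name__ == "__main__":
    print(action_index(["Change drug", "Stop treatment"]))  # 2
```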

In a secondary analysis, each experimental group was divided into two subgroups according to whether clinicians initiated their own evidence searches, yielding four “evidence source” groups: No Evidence (neither librarian nor clinician completed a search), Clinician Only, CICS Librarian Only, and CICS Librarian + Clinician. Only three of these groups had outcome data and could be analyzed in this way (No Evidence consults had no search to rate): Clinician Only, CICS Librarian Only, and CICS Librarian + Clinician.
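The four-way split is a classification on two yes/no flags: whether the CICS librarian searched and whether the clinician searched. The sketch below uses hypothetical names and is meant only to make the grouping explicit.

```python
def evidence_source(cics_search: bool, clinician_search: bool) -> str:
    """Map who searched for evidence onto the four "evidence source" groups."""
    if cics_search and clinician_search:
        return "CICS Librarian + Clinician"
    if cics_search:
        return "CICS Librarian Only"
    if clinician_search:
        return "Clinician Only"
    # No search by anyone: nothing to rate, so no outcome form was completed.
    return "No Evidence"


# Groups with outcome data, per the text.
ANALYZABLE = {"Clinician Only", "CICS Librarian Only", "CICS Librarian + Clinician"}

if __name__ == "__main__":
    for flags in [(True, True), (True, False), (False, True), (False, False)]:
        group = evidence_source(*flags)
        print(flags, group, group in ANALYZABLE)
```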

Multi-level analyses were conducted with SAS PROC MIXED (v9); all other analyses were conducted with SPSS (v14).

Results

Program and Study Characteristics

Consults

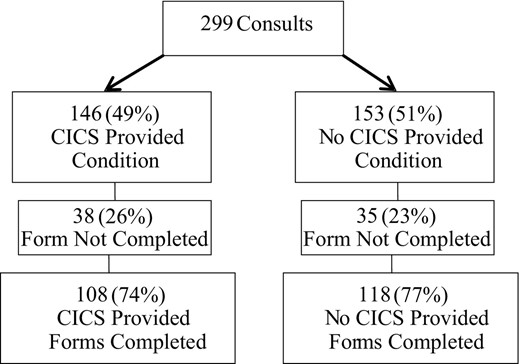

Figure 1 shows the study flow diagram. Over the 19-month study period (08/2004–03/2006), participating study units generated 299 valid CICS consult requests, for which 226 rating forms were completed. Of these 226 consults, 91 (40.3%) came from the MICU, 86 (38.1%) from the NICU, 40 (17.7%) from the Trauma Unit, and 9 (4.0%) from the PICU. A total of 10.2% (23/226) of consults were opt-outs. Reasons given for opting out of randomization included severe patient symptoms and the need for support in searching for evidence. By priority level, 2.4% (3/126) of low-priority, 15.9% (14/88) of medium-priority, and 50% (6/12) of high-priority consults were opt-outs. The MICU generated most of the opt-out requests (20/23), and 22% of all MICU consults (20/91) were opt-outs. Mean levels of all outcome variables were compared by opt-out status using ANOVA; no significant differences were found on any outcome variable between opt-outs (N = 23) and other consults.
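The opt-out percentages can be checked directly from the reported counts. A minimal sketch, with hypothetical function names, that recomputes them rounded to one decimal place:

```python
# Opt-out counts and consult totals by priority level, as reported above.
OPT_OUTS = {"low": (3, 126), "medium": (14, 88), "high": (6, 12)}


def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal place."""
    return round(100.0 * part / whole, 1)


def opt_out_summary(opt_outs=OPT_OUTS) -> dict:
    """Opt-out rate per priority level plus the pooled overall rate."""
    summary = {level: pct(k, n) for level, (k, n) in opt_outs.items()}
    total_k = sum(k for k, _ in opt_outs.values())
    total_n = sum(n for _, n in opt_outs.values())
    summary["overall"] = pct(total_k, total_n)
    return summary


if __name__ == "__main__":
    print(opt_out_summary())  # {'low': 2.4, 'medium': 15.9, 'high': 50.0, 'overall': 10.2}
    print(pct(20, 91))  # MICU opt-outs: 22.0
```

The pooled totals (23 opt-outs among 126 + 88 + 12 = 226 consults) match the overall figure of 10.2%.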

Post-consult rating forms were completed for 226 of the 299 consults, so rating data were missing for 24% of consults overall. Consult requests without rating forms were excluded from further analyses. To check whether missing forms biased the results, we calculated each clinician's percent missing as 1 minus the clinician's number of completed forms divided by that clinician's number of consult requests. The average clinician had 26% missing records (SD 37%). We added each clinician's percent missing to the ITT hierarchical model and re-ran all 21 analyses shown in Table 1. All significant results in the first analysis were replicated in the analysis that controlled for the clinician's percent missing, suggesting that missing data had little impact on the results.
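The two missing-data figures are computed differently: the overall rate pools all consults (73 of 299 without a form), whereas the per-clinician figure averages each clinician's own rate, so a clinician with one request weighs as much as a frequent requester. This sketch shows both calculations; the function names are hypothetical, and the per-clinician records in the usage example are invented for illustration, not study data.

```python
from statistics import mean, stdev
from typing import Dict, Tuple


def percent_missing(completed: int, requested: int) -> float:
    """Percent of consult requests without a completed rating form."""
    return 100.0 * (1 - completed / requested)


def per_clinician_missing(records: Dict[str, Tuple[int, int]]) -> Tuple[float, float]:
    """Mean and SD of clinicians' percent missing; records map id -> (completed, requested)."""
    rates = [percent_missing(done, asked) for done, asked in records.values()]
    return mean(rates), stdev(rates)


if __name__ == "__main__":
    print(round(percent_missing(226, 299)))  # 24
    # Hypothetical clinicians, for illustration only.
    demo = {"A": (2, 2), "B": (1, 4), "C": (3, 3)}
    print(per_clinician_missing(demo))
```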

Table 1. Descriptives, Effect Sizes, and Significance of Outcome Differences by Experimental Group

| Variable* | No CICS Provided N | No CICS Provided Mean | No CICS Provided SD | CICS Provided N | CICS Provided Mean | CICS Provided SD | Effect Size | Prob† (Clinician) | Prob (Librarian) | Prob (Unit) |
|---|---|---|---|---|---|---|---|---|---|---|
| Immediate Impact | 46 | 2.74 | 1.29 | 103 | 2.87 | 1.19 | 0.11 | ns | ns | ns |
| Future Impact | 55 | 3.76 | 1.12 | 103 | 4.25 | 0.78 | 0.52 | <0.01 | <0.01 | <0.01 |
| Action Index†† | 58 | 0.33 | 0.63 | 108 | 0.82 | 1.37 | 0.42 | <0.05 | <0.05 | <0.05 |
| Proportion of Articles Read | 56 | 3.48 | 1.31 | 103 | 3.67 | 1.23 | 0.15 | ns | ns | ns |
| Satisfaction | 57 | 4.02 | 0.94 | 102 | 4.52 | 0.61 | 0.65 | <0.0001 | <0.001 | <0.001 |
| Colleague Consults | 41 | 1.32 | 0.69 | 32 | 1.25 | 0.62 | 0.10 | ns | ns | ns |
| Time Searching | 57 | 3.34 | 3.37 | 108 | 5.49 | 3.05 | 0.68 | <0.001 | <0.001 | <0.001 |


*For all but Action Index, responses could range from 1 to 5. All items were scaled so that higher scores indicated more impact, more articles read, or greater satisfaction.

† Probabilities are p values for the difference in means between the two experimental groups after controlling for clustering by clinician, librarian, or unit.

†† Range for this computed variable was 0–2.


Reasons for the Consults

When asked to endorse or list any and all reasons why a consult request was made to the CICS, the most common response was in order to “update myself or others” (65%, 147/226), followed by “complex case” (31.4%, 71/226), “confirm plan of action” (27.9%, 63/226), “unsure of next actions” (5.7%, 13/226), and “other” (10.6%, 24/226). The most common “other” option was for teaching purposes. Additional “other” responses included asking the question for journal club or for a research proposal.

Clinicians

There were 89 unique clinicians who completed rating forms. Of these, 39% (35/89) were attending physicians, 31% (28/89) fellows, 28% (25/89) residents, and 2% (2/89) nurse practitioners. The percentage of each clinician type did not differ across the experimental conditions (χ² = 0.377, ns). The mean number of consults per clinician was 2.96 (SD 3.17), with a mode of 1 and a range of 1–15. Forty-nine clinicians made more than 1 consult; among them, the mean number of consults per clinician was 4.57 (SD 3.55; range 2–15).

Outcomes Using Hierarchical ITT Analyses

Impact on Patient Care Practices

Table 1 shows results for the main outcomes from the multilevel model. Experimental results came from a model in which each clinician had a unique intercept representing that rater's difference from other raters on each outcome. As described above, this clinician coefficient was necessary to control for the clustering of ratings within clinicians.

Cohen's d effect size (the difference between two group means divided by the pooled standard deviation of the groups) was used for outcome data. Effect sizes are judged by Cohen's36 widely used criteria (0.2 small, 0.5 medium, 0.8 large). Positive effect sizes generally indicate that CICS Provided consults received higher ratings than No CICS Provided consults. For immediate impact of consults, the effect size was small (0.11, below 0.20 SD). Clinicians in both conditions most frequently reported that they were “not sure” of the immediate impact of the evidence (CICS Provided: 39%, 40/103; No CICS Provided: 48%, 22/46). When asked whether the evidence provided or learned had the potential to affect future patient care practices, clinicians in the CICS Provided group gave higher ratings (medium effect size).
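With the pooled standard deviation as the denominator, the effect sizes in Table 1 can be recomputed from the group Ns, means, and SDs. The sketch below (function name hypothetical) uses the usual degrees-of-freedom-weighted pooled variance; for the Immediate Impact, Action Index, and Time Searching rows it gives values that round to the reported 0.11, 0.42, and 0.68. The text does not give the exact pooling formula, so other rows may differ slightly in the second decimal.

```python
from math import sqrt


def cohens_d(n1: int, m1: float, s1: float, n2: int, m2: float, s2: float) -> float:
    """Cohen's d for group 2 minus group 1, scaled by the pooled standard deviation.

    The pooled variance weights each group's variance by its degrees of freedom (n - 1).
    """
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2)
    return (m2 - m1) / sqrt(pooled_var)


if __name__ == "__main__":
    # Table 1 rows: (No CICS Provided N, mean, SD, CICS Provided N, mean, SD).
    rows = {
        "Immediate Impact": (46, 2.74, 1.29, 103, 2.87, 1.19),
        "Action Index": (58, 0.33, 0.63, 108, 0.82, 1.37),
        "Time Searching": (57, 3.34, 3.37, 108, 5.49, 3.05),
    }
    for name, stats in rows.items():
        print(name, round(cohens_d(*stats), 2))
```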

Action Index

Clinicians who rated librarian- or clinician-generated searches as having an impact were then asked to indicate the clinical actions affected. The “action index” row in Table 1 compares the mean number of these actions between groups.

Articles Read

These analyses cover the number of articles found for consults in both conditions and the proportion of those articles that clinicians read. Table 1 shows results for the proportion of articles read. Values reflect clinicians' responses to a 5-point question on the proportion of articles read (1 = 0%, 2 = less than 50%, 3 = about 50%, 4 = more than 50%, 5 = 100%). The difference between conditions in the proportion of articles read was not statistically significant. The mean number of articles found for “CICS Provided” consults was 5.18 (SD 3.59, range 0–17); for the “No CICS Provided” group, clinician-generated searching led to reading a mean of 6.70 articles per consult request (SD 19.05, range 0–100; Cohen's d −0.11; F = 2.80, ns).

Satisfaction with Search

Table 1 shows ratings of overall clinician satisfaction with the results of the respective literature searches, by condition. Clinicians in the CICS Provided condition rated their satisfaction with consult results significantly higher than clinicians in the No CICS Provided condition. On that item, clinicians in the CICS Provided condition rated their overall satisfaction with both the search and the summary, whereas clinicians in the No CICS Provided condition rated their satisfaction with their own searches. In addition to overall satisfaction, clinicians rated two aspects of CICS-generated evidence: summary clarity and summary completeness. The mean rating for summary clarity was 4.45 (SD 0.58, out of a possible 5.0). Only CICS Provided consults could be used to assess whether clinicians felt they needed to search for more articles after receiving actual consult results; clinicians reported that more searching was necessary after receiving CICS results for 19.2% (25/130) of these consults.

Use of Colleague Consults

Sample sizes for this analysis were limited to clinicians who did searches. The experimental conditions did not differ in the frequency of formal or informal colleague consults, either before or after a consult request to the CICS. The proportion of clinicians who made formal colleague consults was 31.6% in the No CICS Provided condition and 33.6% in the CICS Provided condition. The mean number of colleague consults made before the CICS consult request was 1.32 (SD 0.65) in the No CICS Provided condition and 1.04 (SD 0.58) in the CICS Provided condition.

Table 2 shows the specific post-consult actions that could be influenced and how often each was endorsed, by condition. Based on odds ratios, only adding a new or different treatment differed significantly between the groups. Clinicians could endorse as many actions as needed, so the categories were not mutually exclusive. Two write-in “other” responses were “discharge planning” and “need for follow-up.” The “Influence others” category included responses about influencing other clinicians on the team, families or patients, or other individuals involved in clinical decision-making (e.g., nursing home staff).
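For reference, a crude odds ratio for one action can be computed from a 2×2 table of endorsed versus not endorsed by condition, with a Woolf (log-scale) 95% confidence interval. This is a generic sketch, not the authors' procedure: the text does not say how the Table 2 odds ratios were estimated (for example, whether clustering by clinician was taken into account), so it is not expected to reproduce them. The function name, the 0.5 correction for zero cells (Haldane–Anscombe), and the counts in the example are assumptions.

```python
from math import exp, log, sqrt
from typing import Tuple


def odds_ratio_ci(a: int, b: int, c: int, d: int, z: float = 1.96) -> Tuple[float, float, float]:
    """Crude odds ratio with a Woolf (log-scale) confidence interval.

    a, b: endorsed / not endorsed in the CICS Provided group
    c, d: endorsed / not endorsed in the No CICS Provided group
    Adds 0.5 to every cell if any cell is zero (Haldane-Anscombe correction).
    """
    cells = [a, b, c, d]
    if 0 in cells:
        cells = [x + 0.5 for x in cells]
    a, b, c, d = cells
    ratio = (a * d) / (b * c)
    se = sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return ratio, exp(log(ratio) - z * se), exp(log(ratio) + z * se)


if __name__ == "__main__":
    # Invented counts for illustration only: 10 of 50 vs 5 of 50 consults.
    print(odds_ratio_ci(10, 40, 5, 45))
```

The interval is symmetric on the log scale, so its bounds multiply to the square of the point estimate.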

Table 2. Specific Actions Influenced Post-consult by Condition

| Clinical Action Influenced | No CICS (N = 21), % (count) | CICS Provided (N = 94), % (count) | Odds Ratio (95% CI) |
|---|---|---|---|
| Add diagnostic test | 14.3 (3) | 6.4 (6) | ns |
| Change diagnostic test | 0 (0) | 6.4 (6) | ns |
| Cancel diagnostic test | 4.8 (1) | 6.4 (6) | ns |
| Other diagnostic test | 0 (0) | 2.1 (2) | ns |
| Change drug dose | 0 (0) | 6.4 (6) | ns |
| Change drug | 9.5 (2) | 8.5 (8) | ns |
| Add drug | 0 (0) | 5.3 (5) | ns |
| Different or new treatment | 4.8 (1) | 14.9 (14) | 8.20 (1.04–64.00) |
| Duration of treatment | 19.0 (4) | 12.8 (12) | ns |
| Timing of treatment | 19.0 (4) | 11.7 (11) | ns |
| Stop treatment | 9.5 (2) | 9.6 (9) | ns |
| Add component of treatment | 14.3 (3) | 4.3 (4) | ns |
| Influence others | 4.8 (1) | 5.3 (5) | ns |


Sample sizes represent the number of consults in each condition with these data.


Time Searching and Reviewing Evidence

Mean total time (in hours) spent searching for and summarizing articles differed significantly between the randomized groups (CICS Provided: mean 5.62, SD 3.09, N = 130; No CICS Provided: mean 1.38, SD 1.48, N = 34; p < 0.001). For the CICS Provided condition, the reported hours included time spent both seeking and summarizing evidence; for the No CICS Provided condition, they included only time spent searching for evidence.
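
The section reports p < 0.001 for this difference but does not name the test. As an assumption, the sketch below applies Welch's unequal-variance t-test to the reported summary statistics; `welch_t` is a hypothetical helper, not the authors' analysis code. As the text notes, the two means count different activities (searching plus summarizing versus searching only), so the statistic reflects that difference in what was measured.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch two-sample t statistic and Welch-Satterthwaite df from summary statistics."""
    v1 = sd1 ** 2 / n1  # squared standard error of the group 1 mean
    v2 = sd2 ** 2 / n2  # squared standard error of the group 2 mean
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Reported mean hours (SD, N): CICS Provided vs No CICS Provided
t, df = welch_t(5.62, 3.09, 130, 1.38, 1.48, 34)
print(f"t = {t:.2f}, df = {df:.1f}")
```

With these inputs the statistic is roughly t ≈ 11.4 on about 113 degrees of freedom, far beyond the critical value for p = 0.001.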

Clinician Searches

A significantly greater percentage of clinicians reported seeking their own evidence in the CICS Provided condition (70.2%, 92/131) than in the No CICS Provided condition (36.8%, 35/95; Pearson χ² = 25.84, p < 0.001). Within the No CICS Provided condition, clinicians who conducted their own searches did not differ from those who did not in gender, unit, role in unit, number of consults, reason for consult, or formal and informal consultation with colleagues.
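
The percentages and the chi-square statistic can be recomputed from the 2×2 table of counts (searched versus did not search, by condition). This is a minimal sketch assuming a Pearson chi-square without continuity correction; `pearson_chi2_2x2` is a hypothetical helper. Recomputing from these counts gives about 24.9 rather than the published 25.84, and the source of that small difference is not stated in this section; either value lies far above 10.83, the critical value for p = 0.001 with one degree of freedom.

```python
def pearson_chi2_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the 2x2 table [[a, b], [c, d]]."""
    n = a + b + c + d
    return n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))


# Rows: CICS Provided, No CICS Provided; columns: searched, did not search
searched_cics, total_cics = 92, 131
searched_none, total_none = 35, 95
chi2 = pearson_chi2_2x2(searched_cics, total_cics - searched_cics,
                        searched_none, total_none - searched_none)
print(f"{searched_cics / total_cics:.1%} vs {searched_none / total_none:.1%}; chi2 = {chi2:.2f}")
```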

Reasons for No or Little Impact

If clinicians reported that the consult results or their own searches had not affected their patient care practices, they were asked to endorse all applicable reasons for the lack of impact. Table 3 shows, by condition, the reasons clinicians gave when they rated consult impact as “not very much” or “not at all.”

Reasons for No or Little Impact by Condition

| Reason for No or Little Impact | No CICS Provided (N = 46) % (Count) | CICS Provided (N = 54) % (Count) |
|---|---|---|
| Already doing what was recommended | 34.8 (16) | 36.5 (19) |
| Not enough evidence | 21.7 (10) | 27 (14) |
| Not applicable to this patient | 13 (6) | 11.5 (6) |
| Poor quality evidence | 8.7 (4) | 7.7 (4) |
| Not enough time to implement | 4.3 (2) | 7.7 (4) |
| Poor quality literature search | 6.5 (3) | 1.9 (1) |
| Contradictory evidence | 2.2 (1) | 5.8 (3) |
| Cost | 2.2 (1) | 0 (0) |
| Staffing | 2.2 (1) | 0 (0) |
| Training | 2.2 (1) | 0 (0) |
| Information no longer needed | 0 (0) | 1.9 (1) |
| Family or patient decision | 2.2 (1) | 0 (0) |
| Equipment/technology | 0 (0) | 0 (0) |
| Reimbursement | 0 (0) | 0 (0) |

Sample sizes represent the number of consults in each condition with this data.

In both conditions, the most common reasons for little or no impact on patient care practices were ‘already doing what was recommended’ and ‘there was not enough evidence.’

Evidence Source Analyses

The results presented in the previous section compared clinicians across the two randomized groups. The following analyses instead divide consults into four groups based solely on whether CICS-generated evidence and/or clinician-initiated evidence was present, regardless of randomization condition, as shown in Table 4. Based on clinicians' reported searches, the evidence source groups were: None (N = 60), in which no CICS-generated evidence was provided and the clinician did not search; Clinician Only (N = 35), in which only the clinician completed an evidence search; Librarian Only (N = 39), in which only the librarian completed a search; and Librarian and Clinician (N = 92), in which both the librarian and the clinician completed searches. The “None” group contained no data and so was not used in analyses.
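
The grouping rule depends on two yes/no facts per consult: whether CICS evidence was provided and whether the clinician searched. The sketch below encodes that rule and rebuilds the group sizes reported in the text (60, 35, 39, and 92 of 226 consults). The function `evidence_source` and the consult list are illustrative assumptions, not the study's code or data files.

```python
from collections import Counter


def evidence_source(cics_provided: bool, clinician_searched: bool) -> str:
    """Map a consult to one of the four evidence-source groups described in the text."""
    if cics_provided and clinician_searched:
        return "Librarian + Clinician"
    if cics_provided:
        return "Librarian Only"
    if clinician_searched:
        return "Clinician Only"
    return "None"


# Rebuild consult-level (cics_provided, clinician_searched) flags from the reported group sizes
consults = ([(False, False)] * 60 + [(False, True)] * 35
            + [(True, False)] * 39 + [(True, True)] * 92)
counts = Counter(evidence_source(c, s) for c, s in consults)
total = sum(counts.values())
for group, n in counts.items():
    print(f"{group}: {n} ({n / total:.0%} of {total})")

# "None" consults have no evidence to rate, so they drop out of the outcome analyses
analyzed = {g: n for g, n in counts.items() if g != "None"}
```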

Frequencies of Clinician and Librarian Searches

| Randomization Condition | Evidence Source Conditions | Frequency (N) | Percent of Condition | Percent of Total Consults |
|---|---|---|---|---|
| No CICS Provided (N = 95) | 1 No evidence | 60 | 63 (60/95) | 27 (60/226) |
| | 2 Clinician | 35 | 37 (35/95) | 15 (35/226) |
| CICS Provided (N = 131) | 3 Librarian | 39 | 30 (39/131) | 17 (39/226) |
| | 4 Librarian + Clinician | 92 | 70 (92/131) | 41 (92/226) |

To examine how the evidence source groups related to the main outcomes, we repeated the random coefficients model using the evidence source groups (Table 5). Having examined differences between the CICS Provided and No CICS Provided conditions, we then examined the impact of the consult results by evidence source group. The Clinician Only group served as the comparison group against which the Librarian Only and Librarian + Clinician groups were tested.
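
Treating the clinician search group as the comparison corresponds to treatment (dummy) coding of the evidence source variable. The study fit a random coefficients model, which this sketch does not reproduce; it only shows, with hypothetical toy scores that are not study data, how reference coding makes each coefficient a difference from the Clinician Only group. The helper `dummy_code` and the scores are illustrative assumptions.

```python
from statistics import mean

REFERENCE = "Clinician Only"
GROUPS = ["Librarian + Clinician", "Librarian Only"]


def dummy_code(group: str) -> list[int]:
    """Treatment coding: one 0/1 indicator per non-reference group; the reference is all zeros."""
    return [1 if group == g else 0 for g in GROUPS]


# Hypothetical standardized outcome scores (illustrative only, not study data)
scores = {
    "Clinician Only": [-0.5, -0.3, -0.4],
    "Librarian Only": [0.2, 0.4],
    "Librarian + Clinician": [0.3, 0.5, 0.4],
}

# With an intercept plus these indicators and no other predictors, ordinary least
# squares gives intercept = reference-group mean and each coefficient =
# (that group's mean) - (reference-group mean).
intercept = mean(scores[REFERENCE])
coefficients = {g: mean(scores[g]) - intercept for g in GROUPS}
print(intercept, coefficients)
```

Because each coefficient is a difference from the reference group, a negative value means the listed group scored below the Clinician Only group.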

Standardized Beta Coefficients and Standard Errors for Comparisons of Outcomes across Evidence Source Conditions

| Evidence Source | Immediate Impact | Future Impact | Post Action Index | Articles Read | Satisfaction |
|---|---|---|---|---|---|
| Librarian + Clinician | 0.45 (0.25) | 0.81 (0.17)† | 0.57 (0.22)* | 0.32 (0.24) | 0.87 (0.14)† |
| Librarian Only | −0.24 (0.30) | 0.68 (0.21)† | 0.20 (0.22) | −0.06 (0.30) | 0.85 (0.17)† |
| Clinician Only | Comp. | Comp. | Comp. | Comp. | Comp. |

\* p < 0.05.

† p < 0.001. Comp. = comparison group.

Standard errors of the beta coefficients are in parentheses; symbols indicate the significance of each coefficient based on a Wald test.
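
For a single coefficient, the Wald test reduces to z = β / SE compared against the standard normal distribution (equivalently, z² against chi-square with one degree of freedom). The sketch below applies that to the Librarian + Clinician row of the table and adds approximate 95% confidence intervals (β ± 1.96 SE); `wald_test` is a hypothetical helper. Because the table rounds β and SE to two decimals, recomputed p-values are approximate and can fall on the other side of a threshold when a result sits near one.

```python
import math


def wald_test(beta: float, se: float) -> tuple[float, float]:
    """Single-coefficient Wald test: z = beta / SE, two-sided p from the standard normal."""
    z = beta / se
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p


# Librarian + Clinician vs Clinician Only: (beta, SE) from the table
table = {
    "Immediate Impact": (0.45, 0.25),
    "Future Impact": (0.81, 0.17),
    "Post Action Index": (0.57, 0.22),
    "Satisfaction": (0.87, 0.14),
}
for outcome, (beta, se) in table.items():
    z, p = wald_test(beta, se)
    low, high = beta - 1.96 * se, beta + 1.96 * se  # approximate 95% CI
    print(f"{outcome}: z = {z:.2f}, p = {p:.4f}, 95% CI {low:.2f} to {high:.2f}")
```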

Table 5 shows standardized beta coefficients comparing the Librarian + Clinician and Librarian Only conditions against the Clinician Only condition, which served as the reference group. Each coefficient represents the difference between the listed group and the Clinician Only group; thus, a negative coefficient indicates a lower score for the listed group than for the Clinician Only group. Significant effects were found for future impact and satisfaction, and the Librarian + Clinician group also scored significantly higher on the post action index (p < 0.05). For future impact, the Librarian + Clinician and Librarian Only conditions were both statistically significantly higher than the Clinician Only condition (p < 0.001). A follow-up comparison of future impact between the Librarian + Clinician and Librarian Only conditions showed that this difference was also statistically significant (p = 0.02), suggesting that clinician searches had an additional effect on future impact beyond that of librarian searches alone.

Satisfaction ratings in the Librarian + Clinician and Librarian Only conditions were higher than in the Clinician Only condition. As in the previous analyses, satisfaction was a rating of satisfaction with the search results in each condition. The Librarian + Clinician condition's satisfaction rating was 0.87 standardized points (SE 0.14) higher than that of the Clinician Only condition. For the Librarian Only versus Clinician Only comparison, the difference was almost exactly the same (0.85 points, SE 0.17). Both comparisons were significant at p < 0.001.

Time Searching by Evidence Source Groups

Total time spent differed significantly across the evidence source groups. As noted above, time for the two groups associated with CICS Provided consults includes time the librarian spent formally summarizing research. Mean times were 1.38 hours (SD 1.48) for the Clinician Only group, 4.00 hours (SD 1.61) for the Librarian Only group, and 6.32 hours (SD 2.33) for the Librarian + Clinician group.
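
The CICS Provided condition consists of the Librarian Only and Librarian + Clinician groups, so its mean time should be close to a size-weighted average of those two group means. As an assumption, the sketch weights by the Table 4 group sizes (39 and 92); the time data may cover slightly fewer consults (the CICS Provided time mean was based on N = 130), so the match is approximate. `pooled_mean` is a hypothetical helper.

```python
def pooled_mean(groups):
    """Sample-size-weighted mean of subgroup means; groups is a list of (mean, n) pairs."""
    total_n = sum(n for _, n in groups)
    return sum(m * n for m, n in groups) / total_n


# Assumption: weights are the Table 4 sizes for Librarian Only and Librarian + Clinician
cics_provided_mean = pooled_mean([(4.00, 39), (6.32, 92)])
print(f"{cics_provided_mean:.2f} hours")
```

This gives about 5.63 hours, close to the 5.62 hours reported for the CICS Provided condition.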

Discussion

The Clinical Informatics Consult Service (CICS) bridges the gap among clinicians' current knowledge, knowledge generated by research reported in the biomedical literature, and patient care practices. The CICS model attempts to minimize barriers to accessing, evaluating, and using research evidence. It employs specially trained librarians who participate as members of clinical teams and obtain first-hand knowledge of clinician-initiated evidence “consult requests,” allowing them to provide more relevant and focused research articles along with librarian-generated summaries of the research. While many informatics services attempt to bridge the knowledge-action gap,14–20 the Eskind Biomedical Library CICS program is distinctive in equipping non-clinician librarians with the background expertise to locate and summarize relevant, patient-specific research evidence.

While many prior informatics interventions focused on implementing guidelines or clinical practice goals through technology-driven contextual prompts and feedback14,16,17 and defined clinical behaviors as outcomes, the current CICS study assessed the use of evidence across clinical units, medical conditions, and clinicians. Each CICS consult request involved a unique configuration of questions, evidence, and patient care parameters. Even across this multitude of unique situations, the CICS program influenced immediate, specific clinical actions: more actions were taken after completed CICS consults than when consult results were not provided. CICS consult results affected all types of clinical actions, but especially the use of a new or different treatment. Somewhat paradoxically, when responding to a single question assessing overall impact, clinicians typically did not rate the consult results as having an immediate effect on patient care. This may be because the single question about immediate impact preceded the “action index” question on the survey. The action index question required explicit endorsement of actions influenced by the results of CICS or clinicians' own searches and elicited more, and more accurate, information; had it preceded the more general question, it might have produced a higher rating of overall impact.

While the current results indicate that CICS librarians' research summaries had a greater impact than clinicians' bibliographic searches on the number of clinical actions endorsed and on potential future impact (see Results, Table 5), the specific nature and magnitude of the effect of the CICS syntheses are unclear because clinicians read similar numbers of articles across the two conditions. Determining the specific effect of the syntheses would require multiple content experts to rate each of the consult results, a process that was not feasible in the present study. Previous research showed that methodologically trained clinicians and librarians make similar article selections for a given clinical question.37 This result suggests that the CICS research summaries, rather than article selection, may have been the primary mediator of the current study's impact.

Surprisingly, we found that clinicians completed more of their own searches in the “CICS Provided” condition than in the “No CICS Provided” condition. CICS results may have prompted clinicians' curiosity and thus spurred their own information seeking. Research also indicates that physicians regularly encounter multiple clinical questions in their daily practice,38–42 yet many questions are never pursued.38,43–46 This finding raises the question of what clinicians did in the absence of evidence and why some clinical questions were pursued. The most common reason for making a consult request was to update oneself or others. In the “No CICS Provided” condition, clinicians may not have pursued information needs when the consult request was an educational-type question. Because of the randomized design (and the post-hoc assessment of differences in clinician characteristics across the conditions), differential levels of clinician-initiated searches cannot be attributed to demographic differences. It is unknown at what point in the consult process clinicians completed their own searches. The number of clinician-initiated searches in the “CICS Provided” condition suggests that time may be less of a barrier to locating evidence than previous studies have indicated.8–10,12 However, the generalizability of that inference is limited because this study was conducted in an academic teaching hospital, where housestaff time may have been used, to some extent, for literature searches.

Once they had completed literature searches, clinician-subjects in both the “CICS Provided” and “No CICS Provided” conditions reported similar relative rankings of reasons for the lack of knowledge implementation: 1) the patient's clinicians had already implemented what the literature recommended, 2) too little evidence was available to guide decision-making, and 3) the available evidence did not apply to the current patient. By comparison, the most commonly reported barriers to guideline implementation (lack of skills, lack of resources, and counterproductive reimbursement policies3,11,47) were not reported as significant barriers in the present study. The generalizability of the barriers reported in this study may be affected by multiple ongoing organizational programs and initiatives at Vanderbilt designed to promote evidence-based practice. The inability to control related or potentially confounding environmental influences is an inherent limitation in interpreting effectiveness trials.

Additional analyses examined the primary outcomes by source of evidence (clinician, librarian, or both). Both CICS librarian-only and CICS librarian + clinician searches were rated as having more impact on future clinical actions, and received higher satisfaction ratings, than clinician-only searches. The greatest impact on clinical actions occurred when evidence came from both the clinician and the CICS librarian, again suggesting a potentially synergistic effect of CICS.

The current study had several limitations. The primary limitation, by design, was that it did not directly examine the relationship between CICS consultations and patient outcomes; such a study would require significant resources given the multitude of variables and causal paths. Study measurements relied primarily on clinicians' reports, and more objective measurement of clinical actions might enhance reliability and validity. Consults were also randomized by priority level; randomizing by unit instead might have ensured more balanced numbers of CICS Provided results across units. Additionally, measuring librarian skill or expertise would have allowed that factor to be related directly to outcomes. Generalizability of the results might also be limited by the non-random selection of critical care units, because the use and impact of research evidence may vary with contextual and cultural differences across disciplines.3 The working relationship, or trust, between librarians and clinicians was not explicitly measured; however, we believe that the high level of confidence in and satisfaction with the CICS suggests that trust was present. Finally, we do not view the inclusion of less experienced trainees (fellows and residents) as a limitation of the current study. Including residents and fellows was critical to the feasibility, completion, and generalizability of the study, given that housestaff are the most common health care providers in academic critical care units.

Conclusions

The current study found that a librarian-mediated clinical informatics evidence-delivery program facilitated the use of research evidence and influenced clinical actions reported by clinicians. Future research on informatics services should establish the conditions under which clinicians are willing to share substantial responsibility, and potential clinical and resource costs, with non-clinicians for assimilating and using patient-critical evidence. A key success factor for the CICS program was thought to be the specialized clinical and research training that CICS librarians received before joining the clinical care teams. Although debate continues regarding the future of evidence-based medicine,48,49 programs such as the CICS can help bridge the gap between what is known in the literature and what is implemented at the bedside.

References

Research supported by NLM grant 5 R01 LM07849 to N. Giuse (PI).